These TEACHINGS are millennia old. Jeshewua, The Dead Sea Scrolls, Apocrypha, The Nag Hammadi (These are the Words said in Secret by Jesus/Jeshewua/Yeshua, recorded as instructed by us, His Apostles, including the Gospel of Mahari/Mary, the Magdalene), The Atlanteans, those who built the MOAI, New Zealand's Māori, The Native American Indians, the Mayans, the Aztecs, the Dogon of Africa, Tibetan Teachings, Nepalese, Buddha Followers, Krishna, Hanuman, Akhenaten, The 3 Books of Enoch, Ezekiel, The Mahabharata of Krishna-Dwaipayana Vyasa, The Bhagavad-Gita, The Gnostic Essene Teachings, Democritus and Anaxagoras, Plato, Pliny, Plutarch, Aristotle, Copernicus, Da Vinci, the Zulu Shaman Credo Mutwa, Ram Dass, Sheldrake, Jung, Feynman. These are the Masters of GUARDIAN Law of One Divine Science.

The Guardian Groups' mission directive is the rehabilitation of the hologram, repairing its energetic architecture (DNA), embodying the Ascended Human Proto form, supplying informational context on Ascension dynamics and Eco-politic E.T. Agendas, and re-educating both humans and non-humans to be congruently aligned with the Universal Law of One. Freedom, Fraternity, and the Sovereign Right of All Beings to be a Knower of God Source are protected by the Collective Unity Vow shared among our Universal Guardian Groups.

We believe in supporting Freedom of Information, so that the public can become informed, educated and aware of the current shifts impacting our planet and can make informed decisions through free access to this Ascension material based upon the Law of One principles. This body of Ascension material is designed to support the higher development of personal and planetary Consciousness and to advance an evolutionary model of Human Rights and benevolence.

The Ascension Material and the resources available and quoted throughout these posts combine Complete Akashic Record Access, 'electronic data transmissions' from the Akashic Records, years of research and application, and Guardian Materials compiled, edited and adapted from various Guardian Alliance Resources and Groups. All this material comes from the original Essene Teachings, the Nag Hammadi, the Gnostic Texts, The Book of Ezekiel, The Books of Enoch, The Emerald Order Elohim and Ascended Yanas Masters, the Oraphim and Sirian High Councils' Maharata Dispensations, and contacts and communications with certain chosen individuals who relay our messaging and Creation Mechanics teachings of Quantum Unity Theory and the Law of One, from Akhenaten through Maharaji Baba and Ram Dass to Carl Jung's writings and modern-day philosophers.

We have also included the RA & Q'uo Sessions of Carla & Jim, the Voyagers 1 & 2 material from Diana/Anna Hayes/Ashayana Deane/E'yasha Ashayana, and some of the explanations and mantras that Lisa Renee added when she copied Ascension Dictionary.com and all of Anna's Voyagers 1 & 2 material.

ALL of it is here so YOU CAN DECIDE WHAT IS REAL AND WHAT IS DISTORTION. This site exists as simply a portal of methods and teachers for YOU to access as YOU ascend and search on your spiritual journey.

Louis S. Luzzo, Sr.

I AM but a Way-Shower to the truth. In certain Teachings and as editor, I give my impressions and my personal experiences and thoughts only as clarification and possible validation of the material, nothing more. I do not and will not ADD any teachings or dispense any new material BECAUSE THERE IS NO NEW MATERIAL. We are here to clean up and clarify The Teachings.


We choose no censorship; rather, the Guardian philosophy is to encourage you to pursue these truths with your heart and take from these teachings what resonates best for you. We are confident that the truth will find you if you seek it. Free Will is Paramount. All these teachings have been compiled, edited, reassembled and then adapted here in an up-to-date summary form for the purpose of re-introducing you not only to the material but also to the sources, platforms and people who offered these teachings and insights, and of correcting and redirecting the Guardian Alliance Teachings back to the Original dispensations and Law of One Teachings, sans the personal distortions and incorrect definitions that have proliferated over the last few years on individual Speakers' platforms.

We will continue to fulfill our mission as Guardian Speakers and Akashic Scribes, bringing the Teachings back to the masses and population of Earth. The Emerald Order Breneau freely offer this material and the OWNERS are the sentient beings of this Universal Time Matrix.

Krystal Rap, with all the resources and links within, "is dedicated to all awakening Starseeds, Indigos and Light-workers that have the spiritual mission to evolve on the Earth as Guardian Stewards in order to help participate and build the required consciousness structures this planet and humanity will require in the coming Age." We at Krystal Rap encourage ALL of you who are so inspired to help share and be a Way-Shower of Source as well, and to join in getting this messaging out to the world. There is no fee, club, registration, or membership exclusion required to access and benefit from this material. Because you are a sentient expression of Source, it is your Inheritance, and we are ONLY here as the Way-Showers of that Inheritance, as Guardian Speakers. Please share it with all you can.

"Through us, not from us." The more Guardians we have sharing these teachings and true histories, the faster we can bring about the positive changes needed to rid the planet of the Negative Alien Agenda and help us bring about the positive Ascension of Humanity in the New Age. The message is what counts most, not the messengers. The Elohim bless a rare few of us with this message, and it is our privilege as chosen Guardian Speakers at this time to share it with you all.

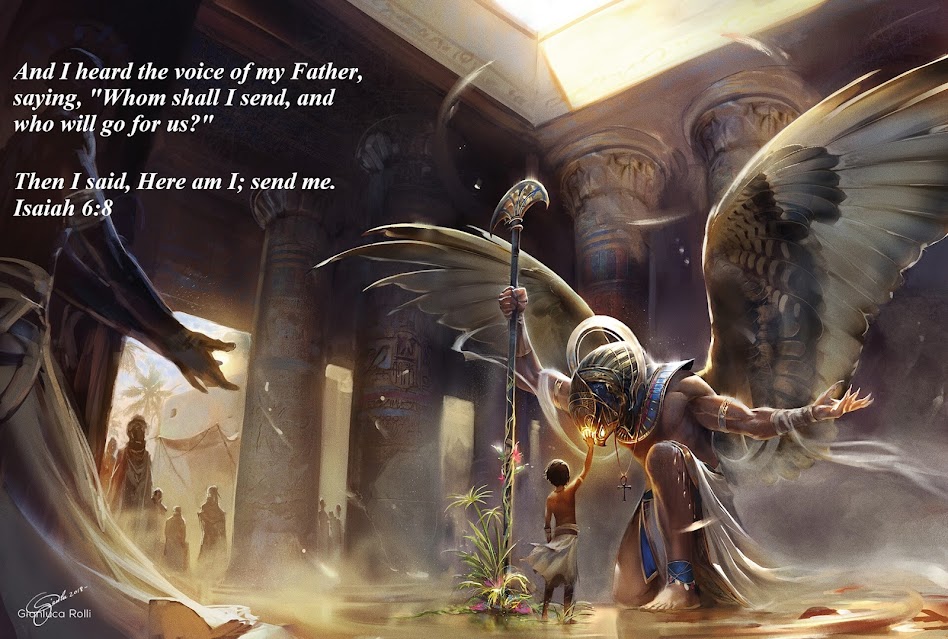

We, like Guardian Jeshewua, speak The Word, and if we profess Christ Consciousness, we must do so neutrally, with no fear, no doubt, no pointing to our deeds or tribulations. We are all important and needed in this Grand Plan of our Infinite Creator and the Law of One. Though Speakers be few, there are many light-working Guardian Stewards of the Law of One messaging, and ALL are encouraged to disseminate this material, sharing it socially or linking to it on your websites. We encourage sharing, with the exception of commercial distribution.

We link directly to them, not only here but in our resources section, as we do with all our source material. We, the Guardian Alliance, do not censor the information and interpretations of our Teachings, whether distorted or not; we offer them in good faith and in full transparency, and we hope that you use discernment in separating the two.

This site is made free and possible because many people like us do not believe that Ascension Material should be exclusive. It is presented as an “Information Commons”: a free, open-source educational reference which, like a physical library or online community, exists to produce, conserve, and preserve information for current and future generations of humanity.

When we say KrystalRap.com™ is a Free Wiki, we mean that there is no obligation for you as the user of this site, in either cost or membership. While we offer this content and the links herein freely, it is protected under copyright and trademark law, and as such, attribution of the Source materials, Speakers, Authors and linked websites represented under the umbrella of Krystal Rap™ who offer these teachings is required in good faith. If you are so inclined, we ask that you donate, as every $1 helps me keep this site commercial-free and ad-free.

I wish you the peace, love and light of our Infinite Creator,

Adonai,

Louis